A recent TikTok video has gone viral, not just for its content but for the warning that appeared above it.

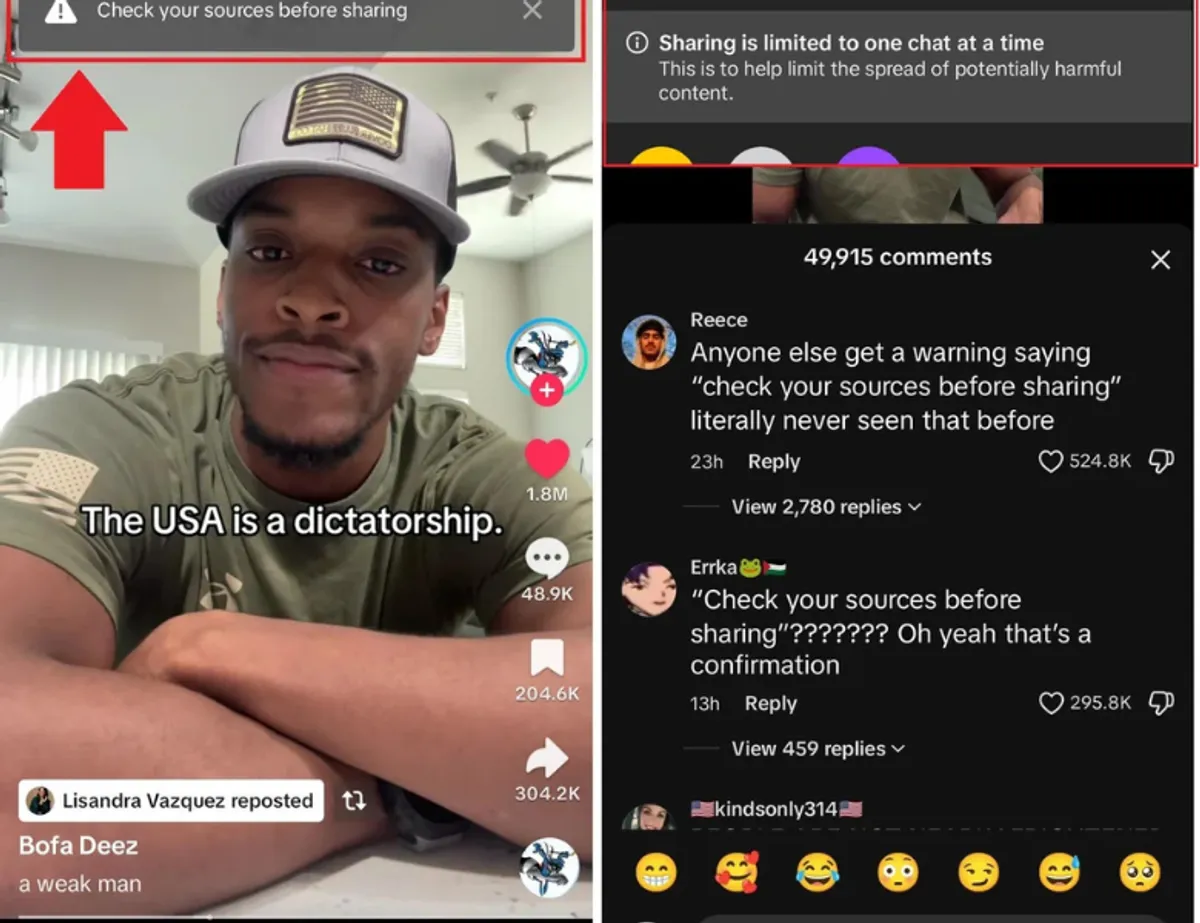

In the clip, a man with the username @longlivejudah shares his political opinion, summed up in his caption: “The USA is a dictatorship.” As with many opinionated political takes on social media, the comments section exploded. But what really caught users off guard was TikTok’s pop-up message: “Check your sources before sharing.” Several TikTok users have said they had never seen a warning dialog like this until it appeared on this specific post.

Even more notable, if you tried to share or save the video, a second, larger pop-up appeared: “Consider the accuracy of what you’re sharing. Remember to check the information in this video with reliable sources.” Users were also restricted from sharing the post with more than one chat at a time due to its “potentially harmful content.”

Let’s unpack what this means and why it really matters.

The New Age of Soft Censorship

TikTok’s warning flag isn’t a direct removal or a fact-check label, but it sends a clear signal: proceed with caution. The platform didn’t say the video was false. It didn’t say it violated community guidelines. Instead, it quietly discouraged sharing by subtly casting doubt on its credibility.

This kind of flag isn’t just about accuracy. It’s about suppressing dissent. It’s about discouraging criticism of the status quo, especially when that criticism challenges systems of power. In a time when actual disinformation is flooding our feeds (lies about elections, vaccines, climate change), it’s telling that a personal opinion about creeping authoritarianism is the content getting flagged.

This is what we at Misinformant refer to as soft censorship. It nudges users away from content under the guise of promoting critical thinking while quietly stifling the conversation. The problem is that it often lacks transparency and can disproportionately affect political and social commentary.

Whose Truth Gets Flagged?

The message “check your sources” seems reasonable until you realize it’s being triggered by an opinion, not a verifiable claim. The man in the video isn’t citing fake statistics or spreading conspiracy theories. He’s expressing a perspective that may be provocative or uncomfortable, but still falls within the bounds of public discourse.

So why the warning?

It raises bigger questions:

- Who decides what’s potentially harmful?

- Why are certain viewpoints flagged while others pass without scrutiny?

- And if these decisions are driven by algorithms, what biases are baked in?

Platforms like TikTok are under enormous pressure to combat misinformation. But in doing so, they walk a fine line between promoting media literacy and chilling dissent. If we’re going to encourage people to verify sources, we should also demand clarity on what triggered the warning in the first place.

At Misinformant, we believe real trust comes from transparency. That means:

- Showing the basis for warnings

- Clearly distinguishing between factual claims and personal opinions

- Empowering users to explore why a piece of content might be flagged, rather than stopping them from seeing or sharing it

Why This Matters More Than Ever

In this election year, amid geopolitical tensions and declining trust in institutions, moments like this are more than just internet drama. They’re reminders that our digital public squares are being quietly policed, and not always in ways we can see or understand.

That’s why we’re building Misinformant. It’s a platform that doesn’t just label something as true or false, but helps you trace the origin of claims, understand the context, and make up your own mind with real data.

Warnings should not replace reasoning. Censorship, even when subtle, should never take the place of truth.